As enterprises race to adopt artificial intelligence, one of the biggest challenges is integrating modern AI solutions with decades‑old technology stacks. Core transaction systems built in COBOL or Java may run mission‑critical workloads, while cloud‑based AI services promise automation and insight. Without a plan, bolting these two worlds together can create more problems than it solves. This guide explains how to evaluate your legacy infrastructure, prepare your data and architecture, and adopt modular strategies that allow artificial intelligence to coexist with existing systems without disrupting day‑to‑day operations.

Ready to implement AI?

Get a free audit to discover automation opportunities for your business.

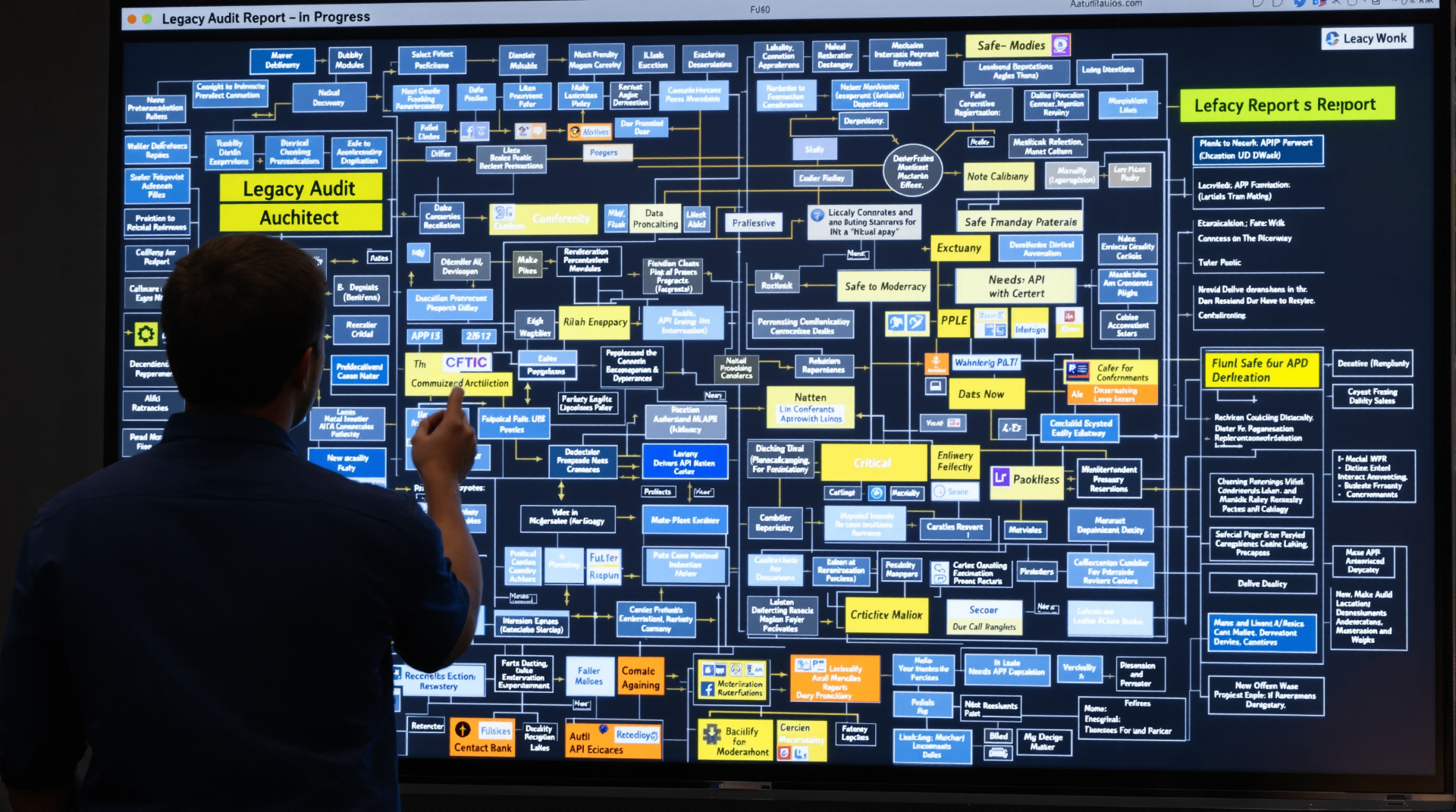

1. Start with a thorough assessment

Before any integration, conduct a detailed audit of your existing systems. Identify which applications are mission critical, which can be decoupled and modernised, and where data lives across databases, file systems and third‑party platforms. Look at the technology stack – languages, frameworks, interfaces – and map dependencies to avoid breaking downstream processes. This exercise helps set realistic integration goals and highlights where AI can add value without exposing core systems to unnecessary risk.
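
Dependency mapping can start as a simple directed graph. The Python sketch below assumes a hand-maintained inventory in which each system lists the systems that consume its output. The system names in `DEPENDENTS` are hypothetical. It walks the graph breadth-first to list every system that would be affected, directly or transitively, if a given component changed.

```python
from collections import deque

# Hypothetical inventory: each system maps to the systems that consume its output.
DEPENDENTS: dict[str, list[str]] = {
    "cobol_ledger": ["billing_batch", "reporting_db"],
    "billing_batch": ["invoice_portal"],
    "reporting_db": ["bi_dashboards", "invoice_portal"],
    "crm_java": ["bi_dashboards"],
    "invoice_portal": [],
    "bi_dashboards": [],
}


def downstream_impact(system: str, graph: dict[str, list[str]]) -> set[str]:
    """Return every system that directly or transitively depends on `system`."""
    seen: set[str] = set()
    queue = deque(graph.get(system, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # already counted via another path
        seen.add(current)
        queue.extend(graph.get(current, []))
    return seen


if __name__ == "__main__":
    for name in DEPENDENTS:
        impact = downstream_impact(name, DEPENDENTS)
        print(f"{name}: {len(impact)} downstream -> {sorted(impact)}")
```

Systems with a large downstream footprint, such as `cobol_ledger` here, are usually poor candidates for the first AI pilot.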

2. Prepare data and infrastructure

Artificial intelligence thrives on high‑quality data. Legacy systems often store information in multiple formats and locations, so building a unified data layer is essential. Extract, transform and load (ETL) pipelines can cleanse and standardise records, while a central data lake or warehouse provides a scalable home for training and inference. Companies should also evaluate whether their on‑premises infrastructure can handle AI workloads or whether a hybrid or cloud‑native approach would be more cost‑effective. Investing in scalable storage, GPU‑enabled compute and secure networking lays the foundation for reliable AI services.
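
As a minimal illustration of the cleansing step, the following Python sketch normalises customer records from two hypothetical legacy sources into one schema with ISO dates and deduplicated IDs. The first source is a fixed-width mainframe extract and the second is a CRM CSV export. The column layouts, field names and date formats are assumptions. A production ETL pipeline would also need validation, error handling and logging.

```python
import csv
import io
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Customer:
    """Unified record shape that downstream AI services consume."""
    customer_id: str
    name: str
    signup_date: str  # ISO 8601, YYYY-MM-DD


def normalise_id(raw: str) -> str:
    # Strip padding and leading zeros so "00001234" and "1234" match.
    return raw.strip().lstrip("0") or "0"


def from_fixed_width(line: str) -> Customer:
    # Hypothetical mainframe layout: id in cols 0-7, name in 8-37, YYYYMMDD in 38-45.
    return Customer(
        customer_id=normalise_id(line[0:8]),
        name=line[8:38].strip().title(),
        signup_date=datetime.strptime(line[38:46], "%Y%m%d").date().isoformat(),
    )


def from_crm_csv(text: str) -> list[Customer]:
    # Hypothetical CRM export with columns id, full_name, joined (DD/MM/YYYY).
    return [
        Customer(
            customer_id=normalise_id(row["id"]),
            name=row["full_name"].strip().title(),
            signup_date=datetime.strptime(row["joined"].strip(), "%d/%m/%Y").date().isoformat(),
        )
        for row in csv.DictReader(io.StringIO(text))
    ]


def merge(*batches: list[Customer]) -> dict[str, Customer]:
    # Deduplicate on customer_id; the first source listed wins on conflict.
    unified: dict[str, Customer] = {}
    for batch in batches:
        for record in batch:
            unified.setdefault(record.customer_id, record)
    return unified


if __name__ == "__main__":
    mainframe_line = f"{'00001234':<8}{'JANE DOE':<30}20190315"
    crm_export = "id,full_name,joined\n1234,jane doe,15/03/2019\n5678,raj patel,01/12/2020\n"
    for record in merge([from_fixed_width(mainframe_line)], from_crm_csv(crm_export)).values():
        print(record)
```

Listing the mainframe batch first makes the system of record win any conflict. The right precedence rule depends on which source the business trusts.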

3. Use modular and phased integration

Instead of a big‑bang replacement, wrap legacy systems with modular components. Application programming interfaces (APIs), microservices and middleware make it possible to insert AI modules without altering core code. For example:

API wrappers allow AI models to call and be called by existing applications, translating between new formats and legacy interfaces.

Microservices separate specific functions – such as recommendation engines or fraud detection – from monolithic applications so they can be updated independently.

Data connectors synchronise information between legacy databases and modern vector databases that support retrieval‑augmented generation (RAG) and other AI paradigms.
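
The API-wrapper idea can be sketched as a thin adapter class. In this hypothetical Python example, `legacy_account_lookup` stands in for an existing transaction that returns pipe-delimited text. The wrapper exposes that record as JSON for an AI service. It also encodes the model's score back into a format an older batch job might expect. Both record formats are invented for illustration.

```python
import json
from typing import Callable


def legacy_account_lookup(account_id: str) -> str:
    # Hypothetical stand-in for a legacy transaction or stored procedure.
    # Returns "id|status|balance-in-cents|currency".
    return f"{account_id}|ACTIVE|00012550|GBP"


class AccountAPI:
    """Exposes a legacy account record in a JSON-friendly shape for AI services."""

    def __init__(self, lookup: Callable[[str], str]):
        self._lookup = lookup  # injected, so the legacy code itself is not modified

    def get_account(self, account_id: str) -> dict:
        acct, status, cents, currency = self._lookup(account_id).split("|")
        return {
            "account_id": acct,
            "active": status == "ACTIVE",
            "balance": int(cents) / 100,
            "currency": currency,
        }

    def get_account_json(self, account_id: str) -> str:
        return json.dumps(self.get_account(account_id))


def encode_score(account_id: str, score: float) -> str:
    # Hypothetical reverse direction: a legacy batch job that expects
    # a four-digit per-mille integer instead of a 0-1 float.
    return f"{account_id}|{round(score * 1000):04d}"


if __name__ == "__main__":
    api = AccountAPI(legacy_account_lookup)
    print(api.get_account_json("A1"))
    print(encode_score("A1", 0.873))
```

Injecting the lookup function keeps the adapter testable without a live mainframe connection.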

Phased rollouts minimise risk. Begin with a pilot in a non‑critical process, monitor performance and refine the integration. Once stable, extend the AI service to additional workflows. Continual testing and version control help ensure that the legacy system continues to operate even as new functionality is introduced.
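
One common way to run a pilot is to route a small, fixed share of traffic to the AI path and fall back to the legacy path on any failure. The Python sketch below assumes both paths are plain callables. It hashes the request ID to keep each request's routing deterministic, so results can be compared across runs. The use of a request ID is an assumption, and any stable key would work.

```python
import hashlib
import logging
from typing import Callable


def in_pilot(key: str, percent: int) -> bool:
    """Deterministically place roughly `percent`% of keys in the pilot cohort."""
    bucket = int(hashlib.sha256(key.encode()).hexdigest(), 16) % 100
    return bucket < percent


def route(
    request_id: str,
    payload: dict,
    legacy: Callable[[dict], str],
    ai: Callable[[dict], str],
    percent: int,
) -> str:
    if in_pilot(request_id, percent):
        try:
            return ai(payload)
        except Exception:
            # Any AI failure falls back to the proven legacy path.
            logging.warning("AI path failed for %s; using legacy path", request_id)
    return legacy(payload)
```

Raising `percent` step by step extends the rollout while keeping the legacy path available as a safety net.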

4. Enable real‑time knowledge with RAG and databases

Large language models trained on static data can "hallucinate" plausible but incorrect answers or miss recent information. Retrieval‑augmented generation reduces this risk by combining an LLM with a search component that pulls relevant facts from a company's knowledge base at runtime and adds them to the model's prompt. To support RAG, organisations must integrate their AI models with databases, knowledge graphs and document stores. This integration requires connectors that fetch documents, convert them into vector embeddings, index them and pass the most relevant passages to the model at query time. Proper indexing and caching keep latency low, while governance rules prevent the retriever from surfacing sensitive data to users who are not authorised to see it.
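
Here is a deliberately simplified Python sketch of the retrieve-then-generate flow. It uses term-frequency vectors and cosine similarity as a stand-in for a real embedding model and vector database. It filters documents by role before ranking, to reflect the governance rule, and then assembles a prompt. The `Doc` fields and role names are hypothetical, and the call to the language model itself is omitted.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    text: str
    allowed_roles: frozenset[str]  # hypothetical access-control metadata


def vectorise(text: str) -> Counter:
    # Toy term-frequency vector; a real system would call an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class Index:
    def __init__(self, docs: list[Doc]):
        # Vectorise once at indexing time, not on every query.
        self._docs = [(d, vectorise(d.text)) for d in docs]

    def search(self, query: str, role: str, k: int = 2) -> list[Doc]:
        q = vectorise(query)
        # Governance: drop documents the caller may not see before ranking.
        visible = [(d, v) for d, v in self._docs if role in d.allowed_roles]
        ranked = sorted(visible, key=lambda dv: cosine(q, dv[1]), reverse=True)
        return [d for d, _ in ranked[:k]]


def build_prompt(question: str, context: list[Doc]) -> str:
    sources = "\n".join(f"[{d.doc_id}] {d.text}" for d in context)
    return f"Answer using only these sources:\n{sources}\n\nQuestion: {question}"
```

Filtering before ranking, rather than after, means restricted text never reaches the prompt even when it is the closest match.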

5. Address privacy and security

Legacy systems frequently contain sensitive customer and transaction data. When introducing AI, apply the same security controls, or stronger ones. Encrypt data at rest and in transit, implement role‑based access controls, and monitor how third‑party AI providers use and store information. Where possible, process personally identifiable information on premises rather than in public clouds. Compliance with regulations such as GDPR, HIPAA or local privacy laws is non‑negotiable, and security teams should review AI models for potential leaks of proprietary information.
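
One way to keep personal data on premises is to swap it for placeholders before any text leaves the network and restore it when the response returns. This Python sketch masks email addresses and card-like digit runs with illustrative regular expressions. The patterns are assumptions and far from exhaustive, so a real deployment would rely on a vetted PII-detection tool.

```python
import re

# Illustrative patterns only; they will miss many real-world PII formats.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13-16 digits, optional separators
}


def redact(text: str) -> tuple[str, dict[str, str]]:
    """Replace PII with placeholders; keep the mapping on premises for re-identification."""
    mapping: dict[str, str] = {}
    for label, pattern in PATTERNS.items():
        def _substitute(match: re.Match, label: str = label) -> str:
            token = f"<{label}_{len(mapping)}>"
            mapping[token] = match.group(0)
            return token
        text = pattern.sub(_substitute, text)
    return text, mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    # Re-insert original values into the AI provider's response, on premises.
    for token, value in mapping.items():
        text = text.replace(token, value)
    return text
```

Only the redacted text is sent to the external service. The mapping stays inside the organisation's own infrastructure.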

6. Leverage the agentic AI trend responsibly

Agentic AI refers to systems that can plan, remember past interactions and act autonomously using integrated tools. While powerful, agentic architectures add complexity to legacy integration. They often rely on orchestrators that call multiple models, connect to APIs and manage workflows. To use them safely, organisations must design a clear task hierarchy, establish guardrails for autonomous actions, and ensure human oversight for high‑impact decisions. Using modular architecture and robust monitoring helps these systems operate in harmony with existing technology.
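
Guardrails for an agent can be enforced outside the model, in the code that executes its plan. The Python sketch below assumes a planner (not shown) has produced a list of `(tool_name, arguments)` steps. The executor runs only allow-listed tools, routes high-impact tools through a human approval hook and caps the number of steps. It records each decision in an audit log. All tool names and the approval rule are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Tool:
    name: str
    run: Callable[[dict], str]
    high_impact: bool = False  # e.g. posts to the ledger or moves money


@dataclass
class Guardrails:
    tools: dict[str, Tool]                # allowlist: anything else is blocked
    approve: Callable[[str, dict], bool]  # human-in-the-loop hook
    max_steps: int = 5
    audit_log: list[str] = field(default_factory=list)

    def execute(self, plan: list[tuple[str, dict]]) -> list[str]:
        results: list[str] = []
        for step, (name, args) in enumerate(plan):
            if step >= self.max_steps:
                self.audit_log.append("halted: step budget exhausted")
                break
            tool = self.tools.get(name)
            if tool is None:
                self.audit_log.append(f"blocked: {name} not on allowlist")
                continue
            if tool.high_impact and not self.approve(name, args):
                self.audit_log.append(f"rejected by reviewer: {name}")
                continue
            results.append(tool.run(args))
            self.audit_log.append(f"ran: {name}")
        return results
```

In practice the `approve` hook might open a ticket or wait for a reviewer's response. The lambda in a test simply simulates that decision.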

7. Invest in governance, monitoring and retraining

Integrating AI into legacy systems is not a one‑time effort. Models drift, data changes and business requirements evolve. Establish governance processes to regularly audit model behaviour, retrain on fresh data and measure business impact. Monitoring tools should track response times, accuracy, cost and user feedback. When issues arise, be prepared to roll back updates without compromising legacy operations. A cross‑functional team of data scientists, engineers and business stakeholders should own the lifecycle of AI components.
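
Monitoring and rollback can be tied together with a simple threshold check. In this Python sketch, `ModelMonitor` keeps a rolling window of prediction outcomes and latencies. It flags a rollback when accuracy falls, or approximate 95th-percentile latency rises, past a limit. `ModelRegistry` then reverts to the previous version. The thresholds and version labels are placeholders, not recommendations.

```python
from collections import deque


class ModelMonitor:
    """Rolling accuracy and latency check that signals when to roll back."""

    def __init__(self, window: int = 100, min_accuracy: float = 0.9, max_latency_ms: float = 500.0):
        self.outcomes: deque[bool] = deque(maxlen=window)
        self.latencies: deque[float] = deque(maxlen=window)
        self.min_accuracy = min_accuracy
        self.max_latency_ms = max_latency_ms

    def record(self, correct: bool, latency_ms: float) -> None:
        self.outcomes.append(correct)
        self.latencies.append(latency_ms)

    def should_roll_back(self) -> bool:
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough evidence yet
        accuracy = sum(self.outcomes) / len(self.outcomes)
        # Approximate p95 by indexing into the sorted window.
        p95 = sorted(self.latencies)[int(0.95 * (len(self.latencies) - 1))]
        return accuracy < self.min_accuracy or p95 > self.max_latency_ms


class ModelRegistry:
    """Hypothetical registry of deployed versions, ordered oldest to newest."""

    def __init__(self, versions: list[str]):
        self.versions = versions
        self.active = versions[-1]

    def roll_back(self) -> str:
        idx = self.versions.index(self.active)
        if idx > 0:
            self.active = self.versions[idx - 1]
        return self.active
```

Because the legacy path keeps running alongside the model, reverting a version affects only the AI component.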

Conclusion

Integrating modern AI into legacy systems can unlock enormous value, but only when approached with discipline and foresight. A thorough assessment of the current architecture, investment in data and infrastructure, and a modular, phased integration strategy allow companies to adopt AI without disrupting core processes. By embracing retrieval‑augmented generation, enforcing robust privacy and security practices, managing emerging agentic AI patterns, and continuously monitoring and retraining models, organisations can modernise their operations without chaos. Thoughtful integration turns AI from a disruptive force into a dependable asset.